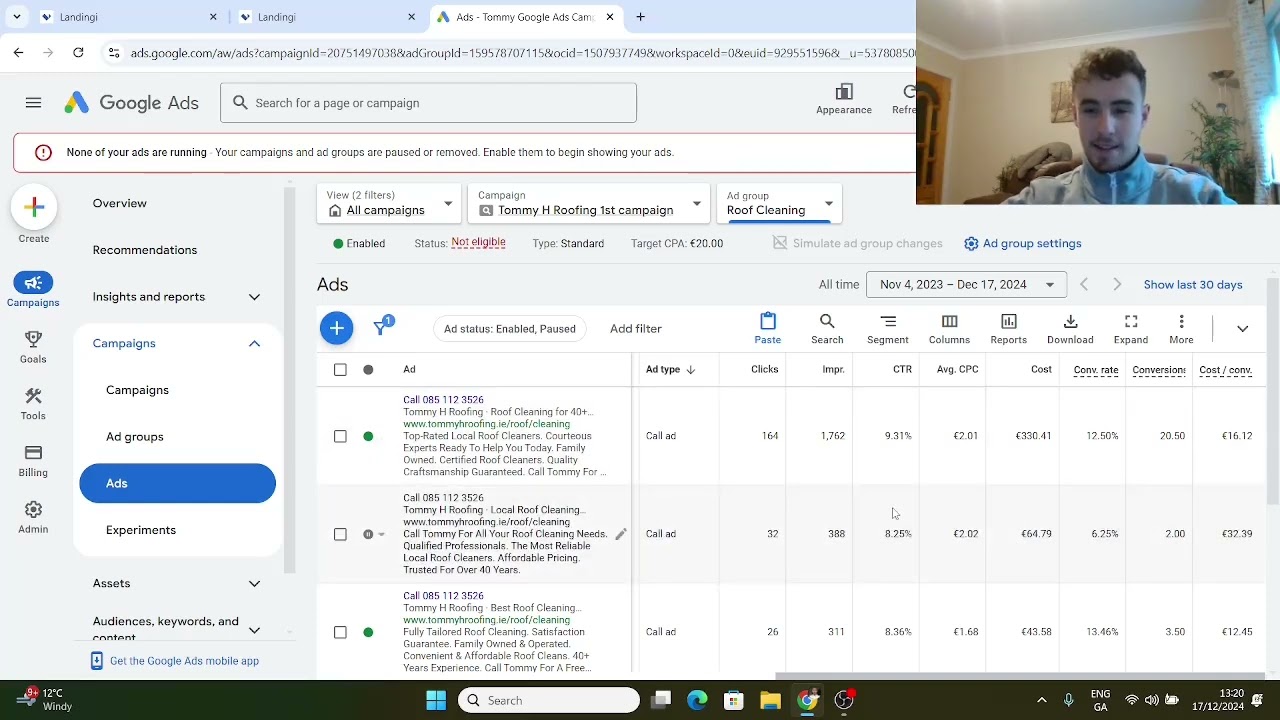

Wasting budget on Google Ads that don’t deliver is frustrating, especially when you’re not sure what’s holding your campaigns back. I’ve seen many advertisers struggle to pinpoint which headlines or calls-to-action actually drive results, and it’s easy to feel stuck when performance stalls.

That’s where structured copy testing comes in. In this article, I’ll break down how to run disciplined, data-driven copy tests—so you can isolate what really works, boost your click-through and conversion rates, and make every ad dollar count.

You’ll get a step-by-step guide to setting up tests, tips for avoiding common pitfalls, and best practices for scaling your efforts across larger accounts. I’ll also cover how to handle Responsive Search Ads, analyze your results with confidence, and tie your findings directly to business growth.

If you want to stop guessing and start making measurable improvements in your Google Ads, you’ll find clear, actionable strategies here. Let’s get started on making your ad copy work harder for you.

Understanding Copy Tests in Google Ads

Copy tests in Google Ads aren’t about hoping your words will work; they’re structured, data-driven experiments designed to show which ad text—like headlines, descriptions, or calls-to-action—actually delivers for your specific metric. The secret? You change just one thing at a time, so it’s crystal clear what’s making the difference.

The approach is about continuous improvement, letting campaign data rather than gut feel decide what stays. Every test needs a clear goal: maybe you’re aiming to lift click-through rate (CTR), or perhaps conversions are the prize.

But here’s where it gets interesting: “winning” depends entirely on the metric you pick. Suppose you’re running a campaign for professional education. One ad nudges CTR up by 5% but leaves conversions flat, while another keeps CTR steady yet boosts conversions by 14%. Judge on CTR and the first ad wins; judge on conversions and the second does. Picking the right focus really does shift everything.

How Copy Tests Work

So, how does a copy test actually run?

Start by choosing a single element to change—just the headline, for example—keeping the rest identical. Build a set of ad variants with only that element different.

Run these ads side by side within the same ad group or campaign. That gives you a fair, “like for like” comparison.

Google Ads streamlines the process with Ad Variations and Experiments to split your traffic. Responsive Search Ads even mix elements using machine learning to highlight the winners.


Tests need 2–4 weeks for reliable data—never cut them short, change too many elements, or judge from too few impressions. This kind of discipline powers ongoing, objective improvement.
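Why weeks rather than days? A rough sample-size calculation makes it concrete. The Python sketch below uses the standard normal-approximation formula for comparing two proportions (here CTR) at 95% confidence and 80% power. The baseline CTR, target lift and daily impressions are hypothetical inputs you’d swap for your own numbers. The function names are illustrative only.

```python
import math
from statistics import NormalDist


def impressions_per_variant(base_ctr: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate impressions each variant needs so a two-sided two-proportion
    z-test can detect the given relative CTR lift (normal approximation)."""
    p1 = base_ctr                          # control CTR
    p2 = base_ctr * (1 + relative_lift)    # CTR we hope the variant reaches
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_needed(base_ctr: float, relative_lift: float,
                daily_impressions: int, variants: int = 2) -> int:
    """Days to reach that sample size, assuming traffic splits evenly across variants."""
    per_variant = impressions_per_variant(base_ctr, relative_lift)
    return math.ceil(per_variant * variants / daily_impressions)


# Hypothetical inputs: 4% baseline CTR, 15% relative lift, 3,000 impressions per day.
print(impressions_per_variant(0.04, 0.15), days_needed(0.04, 0.15, 3_000))
```

Take a 4% baseline CTR, a hoped-for 15% relative lift and 3,000 impressions a day split across two ads. With those inputs the sketch asks for roughly 18,000 impressions per variant, or about 12 days. If you halve the lift you want to detect, the required traffic roughly quadruples. That is why small improvements take longer to prove.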

Why copy testing matters for Google Ads campaigns

Spending on Google Ads without copy testing is like shouting into the void—there’s no telling what works. Structured copy testing changes that. It sharpens campaign performance, safeguards budgets, and ensures every headline or description is proven by actual data.

Business Impact and Performance Gains

With focused copy tests, especially on headlines or calls-to-action, you can see CTR gains of up to 30% and conversion lifts of 15–25%.

These aren’t just vanity figures. Higher CTR and stronger ad relevance feed Google’s Quality Score, unlocking better ad positions, higher visibility, and, in tougher markets, up to 50% lower CPC.

There’s another upside: ongoing testing helps you keep pace with Google’s changes. From new automation to evolving Responsive Search Ads, steady testing helps you scale winners quickly.

Google has confirmed it is running an experiment that automatically rewrites ad copy in live campaigns, a development that directly impacts how advertisers must approach messaging control and performance testing.

Common Pain Points Resolved

Here’s the pitfall: ad hoc optimisation leaves you guessing about what’s driving results, so wasted spend slips in unnoticed. With RSAs, automation can even mask what’s actually working.

Proper, data-driven testing makes everything clear. Weak performers get dropped, feedback is direct, and you can adapt quickly—keeping spending sharp and campaigns efficient, even as Google’s rules evolve.

How to set up and run ad copy tests in Google Ads: A step-by-step guide

Planning Your Test

Start with a clear goal and control your variables closely.

- Set a single objective: decide whether you want to boost CTR, lift conversions, or cut CPA.

- Choose high-traffic, stable ad groups: a good volume of impressions is essential for meaningful data.

- Keep settings, budgets, and landing pages unchanged: this stops your results from being swayed by something outside your test’s control.

- Test just one element at a time: whether it’s the headline, description, or call-to-action, isolate your focus.

Creating Variants and Launching

This is where careful setup pays off.

- Create simple variants: adjust only one copy element; everything else stays matched.

- Use Google Ads Experiments or Ad Variations: handy tools for a smooth split test.

- Set ad rotation to ‘Do not optimise: Rotate ads indefinitely’: this gives each variant a fair, even chance to serve.

- Avoid holidays, promos, or website changes: any big event or update can muddy your findings.
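One quick way to enforce “adjust only one copy element” is to diff the control against the variant before launch. This Python sketch models an ad as a hypothetical `AdCopy` record holding a headline, description, call-to-action and final URL. These fields are illustrative and are not a Google Ads schema. A test counts as clean only if exactly one copy field changes and the landing page stays the same.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class AdCopy:
    """Hypothetical, simplified ad: one of each copy element plus the landing page."""
    headline: str
    description: str
    call_to_action: str
    final_url: str


def changed_elements(control: AdCopy, variant: AdCopy) -> list[str]:
    """Names of the fields that differ between the control and the variant."""
    return [f.name for f in fields(AdCopy)
            if getattr(control, f.name) != getattr(variant, f.name)]


def is_clean_test(control: AdCopy, variant: AdCopy) -> bool:
    """Clean = exactly one copy element changed and the landing page untouched."""
    changed = changed_elements(control, variant)
    return len(changed) == 1 and changed != ["final_url"]


control = AdCopy("Online MBA Courses", "Study part-time with live weekly classes.",
                 "Apply Today", "https://example.com/mba")
headline_test = replace(control, headline="Earn Your MBA Online")
messy_test = replace(control, headline="Earn Your MBA Online", call_to_action="Get the Guide")

print(is_clean_test(control, headline_test), changed_elements(control, messy_test))
```

Run this check before you upload variants. It catches accidental two-element changes, which are far cheaper to fix before launch than after two weeks of muddy data.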

Monitoring and Measuring Results

Patience is your ally here—don’t rush the data.

- Let the test run 2–4 weeks, or until each variant reaches 100–200 clicks or conversions.

- Review variants side by side in Google Ads dashboards: easy tracking is a must.

- Look for 95%+ statistical confidence: a free significance calculator or Google Ads’ built-in experiment reporting will show whether there’s a real winner.

- Only take action after hitting confidence: no shortcuts; wait for clear proof.
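Most free significance calculators for CTR or conversion rate run a two-proportion z-test. Here’s a minimal Python sketch of that test. It assumes a normal approximation, which is reasonable once each variant has a few dozen clicks or conversions. The click and impression counts in the example are made up.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, trials_a: int,
                          successes_b: int, trials_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two rates, e.g. CTR = clicks / impressions
    or conversion rate = conversions / clicks. Returns (z, p_value)."""
    rate_a = successes_a / trials_a
    rate_b = successes_b / trials_b
    pooled = (successes_a + successes_b) / (trials_a + trials_b)  # rate if no real difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all (e.g. zero clicks everywhere): nothing to conclude
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control vs variant, clicks out of 4,000 impressions each.
for label, variant_clicks in [("clear gap", 165), ("small gap", 130)]:
    z, p = two_proportion_z_test(120, 4_000, variant_clicks, 4_000)
    verdict = "significant at 95%" if p < 0.05 else "not significant yet"
    print(f"{label}: z = {z:.2f}, p = {p:.4f} -> {verdict}")
```

A p-value below 0.05 corresponds to the 95% confidence bar. With 120 vs 165 clicks on 4,000 impressions each, the difference clears it. With 120 vs 130 it doesn’t, so keep the test running.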

Troubleshooting & Common Pitfalls

- Not enough data: extend the test or raise your budget.

- Testing too many things: keep it simple, because simple tests give trustworthy insights.

- Outside factors: watch for website changes or competitor moves that skew your results.

Quick-Start Checklist

- Define one goal

- Pick high-traffic groups

- Change one copy element

- Rotate ads evenly

- Keep things stable

- Run until data threshold

- Confirm 95%+ confidence

- Roll out winner or retest

Best practices for effective copy testing in Google Ads

Controlling Variables and Test Structure

Getting consistent, trustworthy results from copy tests rests on tightly controlling variables. If you want to be certain what’s moving the needle in your Google Ads, test just one copy element—maybe the headline, call-to-action, or description—and leave all other factors like landing pages and targeting absolutely unchanged.

Your ad variants should run simultaneously, side by side in the same ad group. There’s a crucial lever here: set ad rotation to “Do not optimise: Rotate ads indefinitely.” This creates a genuinely fair contest for every variant.

Why be so strict? If you change multiple elements or mess with budgets or targeting, you’ll never know which tweak worked. It’s like changing three ingredients in a recipe and not knowing which one made dinner better. For clarity, target the big-impact copy elements—headlines, calls-to-action, and clear value propositions are proven drivers for higher click-through and more conversions.

To stop guessing which ads work best, you must follow a proven methodology. A core principle is isolating a single variable in each test. If you change both the headline and the description at the same time, you won't know which element was responsible for the change in performance, making the results unreliable.

Keep budgets, targeting, and all campaign settings absolutely fixed—your copy is the only thing that should change.

Maximising Learning and Scale

Here’s a tip that changes everything: keep a simple, central record of each ad copy test. Note the purpose, specific variants, timeframes, and the results. This log stops you from repeating yourself, and quickly points the team to what’s worked (or bombed) in the past.

Find a winning variant? Roll it out to relevant ad groups or campaigns. Treat every winner as your new “control”—that’s how you fuel continuous, step-by-step progress.
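Your test log can live in a spreadsheet; the structure matters more than the tool. This Python sketch shows one possible shape for it, using hypothetical field names. Each entry records the purpose, the element under test, the variants, the dates and the winner. The log can then answer two questions: “what’s my current control?” (the latest winner) and “have we tried this copy before?”.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class CopyTest:
    """One log entry. Field names are illustrative, not a Google Ads schema."""
    purpose: str
    element: str              # the single element under test, e.g. "headline"
    control: str
    variants: List[str]
    start: date
    end: Optional[date] = None
    winner: Optional[str] = None
    notes: str = ""


@dataclass
class CopyTestLog:
    tests: List[CopyTest] = field(default_factory=list)

    def record(self, test: CopyTest) -> None:
        self.tests.append(test)

    def current_control(self, element: str, default: str) -> str:
        """The most recent winner for an element becomes the control for the next test."""
        for test in reversed(self.tests):
            if test.element == element and test.winner:
                return test.winner
        return default

    def already_tried(self, element: str, text: str) -> bool:
        """True if this copy has already appeared as a control or variant for the element."""
        return any(t.element == element and (text == t.control or text in t.variants)
                   for t in self.tests)


log = CopyTestLog()
log.record(CopyTest(purpose="Lift CTR on MBA ads", element="headline",
                    control="Online MBA Courses", variants=["Earn Your MBA Online"],
                    start=date(2024, 3, 4), end=date(2024, 3, 25),
                    winner="Earn Your MBA Online", notes="Hypothetical entry"))
print(log.current_control("headline", default="Online MBA Courses"))
print(log.already_tried("headline", "Earn Your MBA Online"))
```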

Before launching a test, define your success metric—maybe it’s CTR, conversions, or lead quality. Shape your copy to aim for this metric, using language that directly pre-qualifies your audience. That way, your data directly lines up with your actual business goals.

Analyzing copy test results: Metrics, significance, and actionable insights

Essential Metrics to Track

So, your copy test wraps up—now what? Tracking the right metrics gives you clarity.

Start with Click-Through Rate (CTR)—it shows if your ad is eye-catching, especially helpful when you’re testing headlines or core messages. If conversions are what you’re after, Conversion Rate reveals which ad is actually driving action. Then there’s Cost per Acquisition (CPA), highlighting whether those results are cost-effective.

Here’s something people often overlook: Impression Share and Top-of-Page Rate let you check that every variant got comparable exposure. Without these checks, you can’t be sure your test was unbiased. Quality Score is subtle but powerful: it affects your costs and ad positioning, so keep an eye on how it trends.
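Here’s a simple exposure check in Python. It’s a rule-of-thumb sketch, not a formal statistical test. It flags any variant whose share of impressions strays too far from an even split, and any variant whose Top-of-Page Rate differs noticeably from the group average. The 5-percentage-point tolerances and the input format are assumptions; tune them to your account.

```python
def exposure_issues(variants: dict[str, tuple[int, float]],
                    share_tolerance: float = 0.05,
                    top_rate_tolerance: float = 0.05) -> list[str]:
    """variants maps an ad name to (impressions, top_of_page_rate).
    Flags uneven impression splits and Top-of-Page Rates far from the group average.
    Tolerances are rule-of-thumb assumptions, not Google guidance."""
    total = sum(impressions for impressions, _ in variants.values())
    even_share = 1 / len(variants)
    mean_top_rate = sum(rate for _, rate in variants.values()) / len(variants)
    issues = []
    for name, (impressions, top_rate) in variants.items():
        share = impressions / total
        if abs(share - even_share) > share_tolerance:
            issues.append(f"{name}: {share:.0%} of impressions vs {even_share:.0%} expected")
        if abs(top_rate - mean_top_rate) > top_rate_tolerance:
            issues.append(f"{name}: top-of-page rate {top_rate:.0%} vs average {mean_top_rate:.0%}")
    return issues


balanced = {"Control": (5_200, 0.62), "Variant B": (4_800, 0.60)}
skewed = {"Control": (6_400, 0.62), "Variant B": (3_600, 0.41)}
print(exposure_issues(balanced))  # prints []
for issue in exposure_issues(skewed):
    print(issue)
```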

Pick your top metrics based on campaign goals: for awareness, focus on CTR and impression share; for leads or sales, watch conversion rate and CPA; for overall efficiency, track Quality Score.
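To make “winning depends on the metric” concrete, this Python sketch computes CTR, conversion rate and CPA for each variant, then picks a winner based on the campaign goal. The figures are hypothetical. They are chosen to mirror the professional-education example earlier: one ad earns slightly more clicks, while the other converts more often and more cheaply. The goal-to-metric mapping is also illustrative. The sketch only compares raw values, so it doesn’t replace a significance check.

```python
from dataclasses import dataclass
from operator import attrgetter


@dataclass
class VariantStats:
    name: str
    impressions: int
    clicks: int
    conversions: int
    cost: float

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.clicks if self.clicks else 0.0

    @property
    def cpa(self) -> float:
        return self.cost / self.conversions if self.conversions else float("inf")


# Goal -> (deciding metric, whether higher is better). An illustrative mapping.
GOAL_METRIC = {
    "awareness": ("ctr", True),
    "leads_or_sales": ("cpa", False),
}


def pick_winner(variants: list[VariantStats], goal: str) -> VariantStats:
    """Best raw value for the goal's metric; run a significance test before acting on it."""
    metric, higher_is_better = GOAL_METRIC[goal]
    choose = max if higher_is_better else min
    return choose(variants, key=attrgetter(metric))


# Hypothetical figures: Ad A wins on CTR, Ad B converts better and cheaper.
ad_a = VariantStats("Ad A", impressions=10_000, clicks=525, conversions=50, cost=1_050.0)
ad_b = VariantStats("Ad B", impressions=10_000, clicks=500, conversions=57, cost=1_000.0)
for goal in GOAL_METRIC:
    print(goal, "->", pick_winner([ad_a, ad_b], goal).name)
```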

Statistical Significance and Decision Frameworks

Don’t rush to declare a winner. Wait until each variant has at least 1,000 impressions, 100 clicks, or 50 conversions, depending on your primary metric.
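These minimums are easy to encode as a pre-check, so you can run it before you even open a significance calculator. The Python sketch below uses the per-variant minimums from this section and the table below. It also adds a two-week floor, taken from the 2–4 week guidance. The data format, one hypothetical dict per variant, is an assumption.

```python
# Per-variant minimums by primary metric, taken from the thresholds in this section.
MINIMUMS = {
    "ctr": {"impressions": 1_000, "clicks": 100},
    "conversion_rate": {"clicks": 100, "conversions": 50},
    "cpa": {"conversions": 50},
}


def ready_to_judge(primary_metric: str, variants: list[dict[str, int]],
                   days_running: int, min_days: int = 14) -> bool:
    """True only when every variant meets the minimums for the primary metric
    and the test has run for at least min_days (two weeks by default)."""
    required = MINIMUMS[primary_metric]
    enough_data = all(variant.get(metric, 0) >= minimum
                      for variant in variants
                      for metric, minimum in required.items())
    return enough_data and days_running >= min_days


# Hypothetical CTR test after 16 days: the second variant is still short on clicks.
variants = [{"impressions": 1_200, "clicks": 110}, {"impressions": 1_150, "clicks": 95}]
print(ready_to_judge("ctr", variants, days_running=16))  # False
```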

Confirm results with a significance test; you want at least 95% confidence. Make sure the ads had equal exposure, and stay alert to external factors (seasonal trends, website changes) that may affect results.

A common mistake is to stop a test the moment it first crosses the significance threshold. Early results fluctuate, so a winner declared at that point is often just noise. Running the test for its full planned duration averages out day-to-day swings and external factors.
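A small simulation shows the cost of stopping early. In this Python sketch, both “variants” share the same true conversion rate (5%), so any declared winner is a false positive. The sketch compares two approaches. The first checks significance after every batch of traffic and stops at the first hit. The second checks once, at the planned end. The rate, batch size, number of looks and seed are arbitrary choices for illustration.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided two-proportion z-test p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate(runs: int = 400, looks: int = 10, visitors_per_look: int = 200,
             rate: float = 0.05, seed: int = 7) -> tuple[float, float]:
    """Both arms share the same true rate, so every 'winner' is a false positive.
    Returns (false-positive rate when stopping at the first significant look,
             false-positive rate when checking once at the planned end)."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        stopped_early = False
        for _ in range(looks):
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_look))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_look))
            n += visitors_per_look
            if p_value(conv_a, n, conv_b, n) < 0.05:
                # A "peeker" would declare a winner here; we keep simulating
                # only so the same data can also be judged once at the end.
                stopped_early = True
        peeking_hits += stopped_early
        fixed_hits += p_value(conv_a, n, conv_b, n) < 0.05  # one check, at the end
    return peeking_hits / runs, fixed_hits / runs


peeking_rate, fixed_rate = simulate()
print(f"stop at first significant look: {peeking_rate:.1%} false winners")
print(f"single check at planned end:    {fixed_rate:.1%} false winners")
```

The peeking strategy also counts every case where the final check is significant. Its false-positive rate therefore can never be lower than the single check’s. In practice it comes out noticeably higher, while the single check stays close to the nominal 5%.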

Choose the ad variant that truly supports your business goal. Update your groups and ready that next test—growth is all about learning and iterating.

| Metric | What It Measures | Threshold or Check (per variant) |
|---|---|---|
| CTR | Ad engagement | 1,000+ impressions, 100+ clicks |
| Conversion Rate | Post-click success | 100+ clicks, 50+ conversions |
| CPA | Cost per acquisition | 50+ conversions |
| Impression Share | Exposure fairness | Match across ads |
| Quality Score | Ad/keyword relevance | Monitor as a trend |

Responsive Search Ads: Challenges and best practices for copy testing

Key Challenges of RSAs for Copy Testing

Working with Responsive Search Ads (RSAs) can feel like trying to solve a puzzle where the pieces keep shifting. Google mixes up to 15 headlines and 4 descriptions, serving different combinations every time someone searches.

You simply can’t run a straightforward A/B test—there’s no way to say exactly which mix will appear. RSAs introduce unpredictability, which complicates measuring impact.
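To put numbers on that unpredictability: an RSA shows up to three headlines and two descriptions at a time. This Python sketch counts the ordered arrangements with `math.perm`. It ignores pinning and the cases where Google shows fewer assets. On that basis, a fully loaded RSA can produce 32,760 distinct ads, far too many for a classic A/B split.

```python
from math import perm


def rsa_arrangements(headlines: int = 15, descriptions: int = 4,
                     shown_headlines: int = 3, shown_descriptions: int = 2) -> int:
    """Ordered ways to fill the visible headline and description slots.
    Ignores pinning and the cases where fewer assets are shown."""
    return perm(headlines, shown_headlines) * perm(descriptions, shown_descriptions)


print(rsa_arrangements())  # 2,730 headline orderings x 12 description orderings = 32760
```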

Although Google provides asset-level data for impressions and clicks, there’s a catch. Most conversions come after someone sees a blend of headlines and descriptions, so you can’t credit a specific asset for the win. To uncover real patterns, aim for at least 1,000 impressions per asset; with less, any trend is unreliable. That’s why low-traffic or unstable ad groups rarely produce trustworthy asset insights.

Modern Solutions and Best Practices

So, what works best in this ever-shifting landscape? Pinning assets (locking copy into fixed positions) helps meet compliance or branding needs, but overdoing it limits Google’s optimisation and can lower your Ad Strength.

A smarter approach is to compare pinned and unpinned asset sets side by side to see which moves your key metrics. Group your assets by messaging themes—urgency, benefits, or proof—and track which group actually wins attention.
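Once you export the asset report, grouping assets by theme and aggregating their numbers is straightforward. This Python sketch assumes a hypothetical export format: one dict per asset with its text, theme, impressions and clicks. It skips assets below the 1,000-impression minimum mentioned above. Keep in mind that asset-level clicks come from combinations, so theme CTR is a directional signal rather than a clean attribution.

```python
from collections import defaultdict

MIN_ASSET_IMPRESSIONS = 1_000  # per-asset minimum suggested in this section


def theme_ctr(assets: list[dict]) -> dict[str, float]:
    """Aggregate CTR by messaging theme, skipping assets without enough impressions."""
    totals = defaultdict(lambda: [0, 0])  # theme -> [impressions, clicks]
    for asset in assets:
        if asset["impressions"] < MIN_ASSET_IMPRESSIONS:
            continue  # too little data to trust
        totals[asset["theme"]][0] += asset["impressions"]
        totals[asset["theme"]][1] += asset["clicks"]
    return {theme: clicks / impressions for theme, (impressions, clicks) in totals.items()}


# Hypothetical export rows, tagged with a theme by hand.
assets = [
    {"text": "Enrol by Friday", "theme": "urgency", "impressions": 2_400, "clicks": 96},
    {"text": "Last places for spring intake", "theme": "urgency", "impressions": 1_800, "clicks": 81},
    {"text": "Study around your job", "theme": "benefits", "impressions": 3_000, "clicks": 105},
    {"text": "Rated 4.8 by our alumni", "theme": "proof", "impressions": 900, "clicks": 60},
]
for theme, ctr in sorted(theme_ctr(assets).items(), key=lambda item: -item[1]):
    print(f"{theme}: {ctr:.2%}")
```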

If you have a high-traffic group, review asset performance regularly, replace weak assets, and keep a log of every change with your reasoning. Don’t depend on Google’s ratings like “Best” or “Learning”—cross-check with major campaign metrics such as conversion rate and CPA.

Keeping thorough records and making iterative data-driven changes turns RSAs from guesswork into a repeatable engine for optimisation. That’s how you steadily grow campaign performance, month after month.

For true data-driven optimization of Responsive Search Ads, prioritize asset labels, which reflect actual performance based on impressions, over the 'Ad Strength' score, which is simply a predictive best-practice metric from Google.

Scaling and automating copy tests in larger Google Ads accounts

Scaling copy tests across a big Google Ads account? That’s where the real challenges start. With lots of ads and sprawling campaigns, you hit new risks. Automation slip-ups, lost documentation, and creative fatigue all creep in once you take things up a notch.

Scaling Strategies: Tools, Tactics, and Pitfalls

Google’s built-in tools—like Experiments, ad customisers, and IF functions—let you run many tests quickly. But here’s the catch: more automation means new pitfalls if you’re not paying attention.

- Campaign-level experiments: launch controlled tests across ad groups. Beware: different traffic or markets can easily throw your results off balance.

- Ad customisers and feeds: feeds power personalised ads at scale, like city-specific hotel rates. Regular quality checks are critical, because broken feeds mean generic or incorrect ads.

- IF functions/templates: fine-tune copy for device or audience. The downside? Overusing templates leads to bland, repetitive ads, so schedule content reviews for variety.
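You can partly automate the “regular quality checks” on feeds. This Python sketch validates a hypothetical hotel-rate feed before it reaches your ads. It flags rows with missing or non-numeric rates, and rendered headlines that would exceed the 30-character RSA headline limit. The column names and the headline template are assumptions made for illustration.

```python
REQUIRED_FIELDS = ("city", "nightly_rate")
HEADLINE_TEMPLATE = "Hotels in {city} from £{nightly_rate}"  # hypothetical template
HEADLINE_LIMIT = 30  # RSA headline character limit


def check_feed(rows: list[dict]) -> list[str]:
    """Return human-readable problems found in a hypothetical hotel-rate feed."""
    problems = []
    for i, row in enumerate(rows, start=1):
        missing = [name for name in REQUIRED_FIELDS if not str(row.get(name, "")).strip()]
        if missing:
            problems.append(f"row {i}: missing {', '.join(missing)}")
            continue
        try:
            rate = float(row["nightly_rate"])
        except (TypeError, ValueError):
            problems.append(f"row {i}: nightly_rate {row['nightly_rate']!r} is not a number")
            continue
        if rate <= 0:
            problems.append(f"row {i}: nightly_rate must be positive")
        headline = HEADLINE_TEMPLATE.format(**row)
        if len(headline) > HEADLINE_LIMIT:
            problems.append(f"row {i}: headline is {len(headline)} characters ({headline!r})")
    return problems


feed = [
    {"city": "Leeds", "nightly_rate": "79"},
    {"city": "Newcastle upon Tyne", "nightly_rate": "89"},
    {"city": "York", "nightly_rate": ""},
    {"city": "Bath", "nightly_rate": "abc"},
]
for problem in check_feed(feed):
    print(problem)
```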

Automation brings scale but isn’t set-and-forget. Scripts and rules help manage volume but must be monitored closely—small errors can pause high performers or let weak ads slip through.

Automation, Efficiency, and Documentation Best Practices

- Scripts for variant management: automate variant rotation, but don’t let script errors tank your best ads.

- Automated rules for governance: use metric-based routines, but don’t make them so rigid that you lose potential winners.

- Centralised documentation: keep thorough test logs in team-shared tools to capture every insight.
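Here’s the kind of guardrail that stops an automated rule from killing a winner too early. In Google Ads itself you’d build this with automated rules or a Google Ads script, and scripts are written in JavaScript. The Python below only sketches the decision logic, and its names and thresholds are hypothetical. A challenger is suggested for pausing only when both ads have enough impressions and its CTR is significantly lower than the control’s. The function returns suggestions for a human to review; it doesn’t pause anything itself.

```python
import math
from dataclasses import dataclass
from statistics import NormalDist


@dataclass
class AdStats:
    ad_id: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions


def ctr_p_value(a: AdStats, b: AdStats) -> float:
    """Two-sided two-proportion z-test on CTR."""
    pooled = (a.clicks + b.clicks) / (a.impressions + b.impressions)
    se = math.sqrt(pooled * (1 - pooled) * (1 / a.impressions + 1 / b.impressions))
    if se == 0:
        return 1.0
    return 2 * (1 - NormalDist().cdf(abs(a.ctr - b.ctr) / se))


def suggest_pauses(control: AdStats, challengers: list[AdStats],
                   min_impressions: int = 1_000, alpha: float = 0.05) -> list[str]:
    """Suggest pausing a challenger only when both ads have enough data AND the
    challenger's CTR is significantly below the control's. The control is never touched."""
    suggestions = []
    for ad in challengers:
        if min(ad.impressions, control.impressions) < min_impressions:
            continue  # not enough data: keep it running rather than risk cutting a winner
        if ad.ctr < control.ctr and ctr_p_value(control, ad) < alpha:
            suggestions.append(ad.ad_id)
    return suggestions


control = AdStats("A", impressions=4_000, clicks=160)   # 4.00% CTR
challengers = [AdStats("B", 4_000, 110),                 # 2.75%: clearly worse
               AdStats("C", 600, 12),                    # too little data
               AdStats("D", 4_000, 150)]                 # 3.75%: within noise
print(suggest_pauses(control, challengers))  # ['B']
```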

Blend automation with human oversight and strong records. That’s how scalable copy testing delivers real, lasting campaign growth.

Avoiding common pitfalls in copy testing

Testing too many elements or rushing decisions

Only test one copy element at a time—just the headline, call-to-action, or description—while keeping everything else constant. If you change more than one thing, you’ll never know what actually worked. Google Ads Experiments keeps your variables in check.

Let tests run for at least two weeks, and keep them running until each variant has enough data for your primary metric. If you accidentally mix variables, restart with a cleaner setup.

Ignoring statistical significance

Tempted to call a winner early? That’s how bad decisions happen. Wait for 1,000 impressions, 100 clicks, or 50 conversions per variant. Use Google's reporting or a significance calculator to check if you’ve genuinely found a winner. Stopped too early? Just rerun or extend your test.

Focusing on the wrong metrics

A big spike in CTR might look great, but that doesn’t always drive more sales. It’s far smarter to focus on conversions, CPA, or ROAS—those outcome-based metrics are what truly matter for your goals.

Clinging to outdated practices

Sticking with old ad formats and outdated routines holds your campaigns back. Keep watch for Google Ads updates and move towards Responsive Search Ads and asset-based reporting. Staying current unlocks new features and better results.

Ongoing improvement: Iteration, learning, and tying copy tests to business growth

Strategies for Continuous Testing and Learning

Building true momentum in Google Ads isn’t about one big change—it’s all about making copy testing a routine habit. Weekly or monthly check-ins help you quickly spot underperformers or signs of creative fatigue before they hurt results.

Set yourself a quarterly rhythm for refreshing ad variants. Why? Because audience tastes and market dynamics shift fast. Keeping a detailed record of each test—the variants, timings, and all your wins and losses—stops you from making the same mistake twice and becomes a goldmine for future tweaks.
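A weekly check-in for creative fatigue can start as a simple comparison: each ad’s recent CTR against its own earlier baseline. This Python sketch flags ads whose average CTR over the last two weeks has dropped more than 20% below the four weeks before. The window sizes, the threshold and the data format are all assumptions. Because of week-to-week noise, treat a flag as a prompt to investigate, not as proof of fatigue.

```python
def fatigue_flags(weekly_ctr: dict[str, list[float]], baseline_weeks: int = 4,
                  recent_weeks: int = 2, drop_threshold: float = 0.20) -> dict[str, float]:
    """Flag ads whose average CTR over the most recent weeks is more than
    drop_threshold (relative) below their average over the preceding baseline weeks."""
    flagged = {}
    for ad, series in weekly_ctr.items():
        if len(series) < baseline_weeks + recent_weeks:
            continue  # not enough history yet
        baseline = series[-(baseline_weeks + recent_weeks):-recent_weeks]
        recent = series[-recent_weeks:]
        baseline_avg = sum(baseline) / len(baseline)
        recent_avg = sum(recent) / len(recent)
        drop = (baseline_avg - recent_avg) / baseline_avg if baseline_avg else 0.0
        if drop > drop_threshold:
            flagged[ad] = round(drop, 3)
    return flagged


# Hypothetical weekly CTRs, oldest first.
weekly = {
    "Ad A": [0.050, 0.052, 0.049, 0.051, 0.038, 0.036],  # sharp recent slide
    "Ad B": [0.041, 0.040, 0.042, 0.041, 0.040, 0.039],  # steady
    "Ad C": [0.030, 0.031],                              # too new to judge
}
print(fatigue_flags(weekly))
```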


Every test winner should become your new control, pushing your results up one step at a time. Think of it as climbing a ladder, each test helping you reach new heights. Using this iterative, data-first approach means your messaging always stays in sync with what your audience wants—no more guesswork or sticking with stale messages.

Optimising Landing Pages and Content

It’s not just about ad copy—landing pages play a starring role in conversions. Matching headlines, offers, and visuals creates consistency and trust, which drives more people to act.

And those copy test insights? Feed them right back into your landing page updates to keep everything aligned. For teams aiming to grow fast while safeguarding quality, managed services like SEOSwarm offer ongoing site and blog optimisation, adapting content as your Google Ads campaigns evolve.

If you need to launch new content fast, Blog-in-one-minute delivers a rapid, SEO-guided blog setup—helping you support ever-changing ads with high-converting, timely content. Keeping your site and ads iterating together is the secret to lasting growth and ROI.

Staying current: Navigating Google Ads platform and policy updates

How to Stay Up-to-Date and Compliant

Miss a Google Ads update and your campaign can grind to a halt—suddenly, ad disapprovals, missing features, or suspension become real headaches. With stricter rules landing all the time, staying ahead really matters.

- Check the Google Ads Policy Center monthly: early alerts reveal rule changes and prevent surprises.

- Subscribe to official updates: Google’s release notes and notifications highlight new changes and features.

- Automate compliance checks: scripts or plugins can flag policy issues in your copy straight away.

- Audit ads after each major update: review all copy tests and landing pages, and update documentation to stay compliant.
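An automated compliance pass can begin with a few mechanical checks that run before anything reaches Google’s review. This Python sketch enforces the RSA character limits: 30 for headlines and 90 for descriptions. It also flags a few common editorial problems: exclamation marks in headlines, repeated punctuation and all-caps words. Those editorial checks are illustrative assumptions based on Google’s published editorial policy, so verify them against the current policy pages. The list is deliberately incomplete.

```python
import re

HEADLINE_LIMIT = 30      # RSA headline character limit
DESCRIPTION_LIMIT = 90   # RSA description character limit


def copy_issues(headlines: list[str], descriptions: list[str]) -> list[str]:
    """Mechanical pre-checks only; these do not replace Google's policy review."""
    issues = []
    for text in headlines:
        if len(text) > HEADLINE_LIMIT:
            issues.append(f"headline over {HEADLINE_LIMIT} characters: {text!r}")
        if "!" in text:
            issues.append(f"exclamation mark in headline: {text!r}")
    for text in descriptions:
        if len(text) > DESCRIPTION_LIMIT:
            issues.append(f"description over {DESCRIPTION_LIMIT} characters: {text!r}")
    for text in headlines + descriptions:
        if re.search(r"([!?.])\1", text):      # e.g. "!!" or "??"
            issues.append(f"repeated punctuation: {text!r}")
        if re.search(r"\b[A-Z]{4,}\b", text):   # e.g. "FREE"; short acronyms like MBA pass
            issues.append(f"all-caps word: {text!r}")
    return issues


headlines = ["Earn Your MBA Online", "Apply Now!!", "Part-Time MBA for Working Professionals"]
descriptions = ["Study around your job with weekly live classes. FREE info pack."]
for issue in copy_issues(headlines, descriptions):
    print(issue)
```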

Customer Match, targeting, and data consent are changing quickly—so always keep your consent process up to date.

When something shifts, pause and rework affected ads, tracking every change for your records.

Regular quarterly reviews keep your team in sync and compliance sharp, making copy testing sustainable—even as Google’s rules evolve.

Getting Results with Copy Tests in Google Ads

Most advertisers waste budget by guessing what works, but disciplined copy testing turns every campaign into a learning engine. The real edge comes from testing one copy element at a time, tracking the right metrics, and letting data—not hunches—guide your next move.

If you want to see lasting gains, here’s my advice: set a single goal, keep your variables tight, and run each test until you hit statistical confidence. Document every result, roll out your winners, and make copy testing a habit—not a one-off.

Google Ads will keep changing, but your process should keep evolving, too. The advertisers who win are those who treat every test as a step forward, not just a box to tick. Progress isn’t about luck—it’s about disciplined iteration, one headline at a time.

- Wil